More than a dozen scores assessing our personal health are being mashed up, many using the digital data exhaust we leave behind: conversations scraped from Facebook and Twitter, readings from digital tracking devices such as Fitbit and Jawbone, retail shopping receipts, geo-location data created by our mobile phones, and publicly available databases, along with any number of bits and pieces about ‘us’ we (passively) generate going about our days.

Welcome to The Scoring of America: How Secret Consumer Scores Threaten Your Privacy and Your Future.

Pam Dixon and Robert Gellman wrote this well-documented report, published April 2, 2014 by The World Privacy Forum. The report covers the landscape of scoring categories and methodologies used to paint pictures of consumers through different lenses and industry views: financial, energy utilization (“green scores”), fraud, credit, social clout, and more granular indices such as tendency toward charitable giving and a pregnancy predictor.

And did I mention health?

Dixon and Gellman point to more than a dozen consumer health score algorithms, some well-known in the industry, such as the SF-36 form gauging physical and mental health, and the RUG (resource utilization group) score that predicts nursing home residents’ projected resource use. When these two scores are used by health providers, they would be covered under HIPAA privacy and security rules.

However, there are other health scores that live in the shadows: ever heard of the FICO medication adherence score, or the One Health Score? You’ll learn about these and other consumer scores in this important report. The health care industry has many lessons to learn from the financial and retail markets, which are well ahead of health care in slicing and dicing people’s behavioral data for marketing and managing their businesses and bottom lines.

Health Populi’s Hot Points: In this era of Big Data and analytics in health, those organizations going at risk in health care will be motivated to use lots of data to help manage that risk while providing health care and maintaining organizational fiscal health. Dixon and Gellman provide a long (long) list of data points that are available on consumer behaviors (from collecting antiques and mobile phone carrier to Bible purchasing and sexual orientation) that can be mashed up in algorithms (mathematical models) to segment people, target people, market to people... ruling people in, and ruling people out.

There are two key points to be mindful of:

- The potential for discrimination in the use of “dark data” in less-than-transparent algorithms and business models; and,

- The central role of the consumer in becoming mindful in the use of social media, digital health devices, and daily creation of “data exhaust” that can be used by third parties not covered by HIPAA and other consumer privacy laws.

The laws that protect Americans from health privacy risks are sewn (quite loosely) into a patchwork quilt that can fragment at the state level. A handful of U.S. states have strong data protections (among them, California and Hawaii).

But we have already entered the era where consumers must pay much more attention to how their user-generated data is getting used by third parties. That is especially true in the U.S., which lacks the overall national right to privacy and ownership of personal data that (health) citizens in the EU enjoy. There are technologies that people can lay on top of their apps, among them Ghostery, Lightbeam, and Privowny.

As we’ve learned to dissect and repair our FICO scores for credit to buy houses and cars, consumers must figure out how to do the same with personal information that’s falling through social media and geo-location holes in their illusory privacy fabric.

I’ll be covering these issues in my next paper for the California HealthCare Foundation, due out in June. Stay tuned for a deep dive into the thorny challenge of balancing privacy for the N of 1 and health for the good of many.

I'm in amazing company here with other #digitalhealth innovators, thinkers and doers. Thank you to Cristian Cortez Fernandez and Zallud for this recognition; I'm grateful.

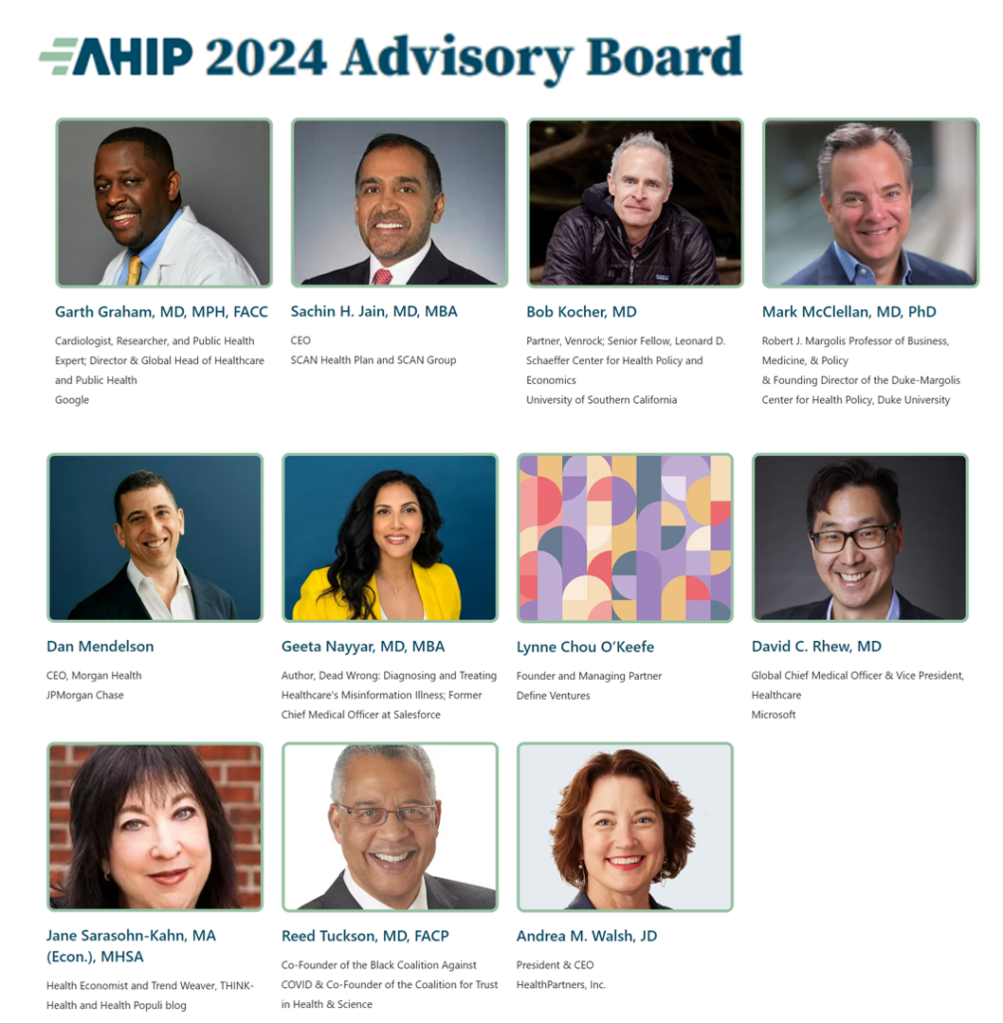

Jane was named as a member of the AHIP 2024 Advisory Board, joining some valued colleagues to prepare for the challenges and opportunities facing health plans, systems, and other industry stakeholders.  Join Jane at AHIP's annual meeting in Las Vegas: I'll be speaking, moderating a panel, and providing thought leadership on health consumers and bolstering equity, empowerment, and self-care.