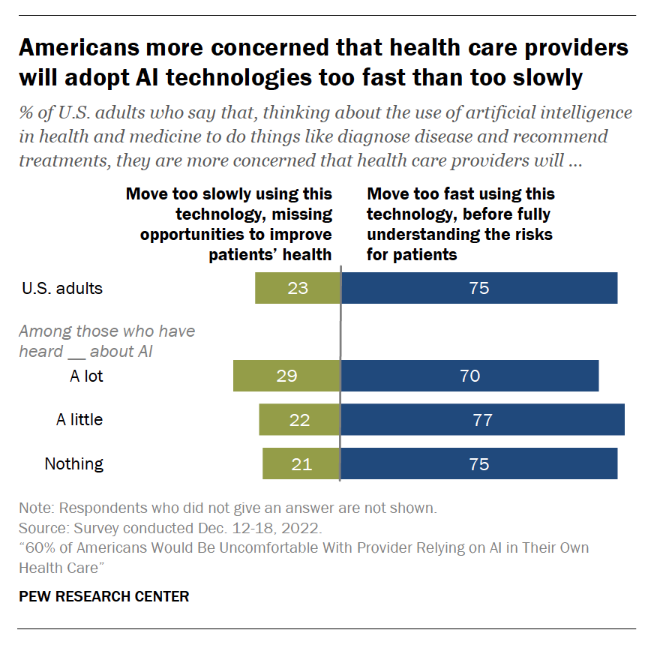

Most U.S. health citizens think AI is being adopted in American health care too quickly, and they feel "significant discomfort…with the idea of AI being used in their own health care," according to consumer studies from the Pew Research Center.

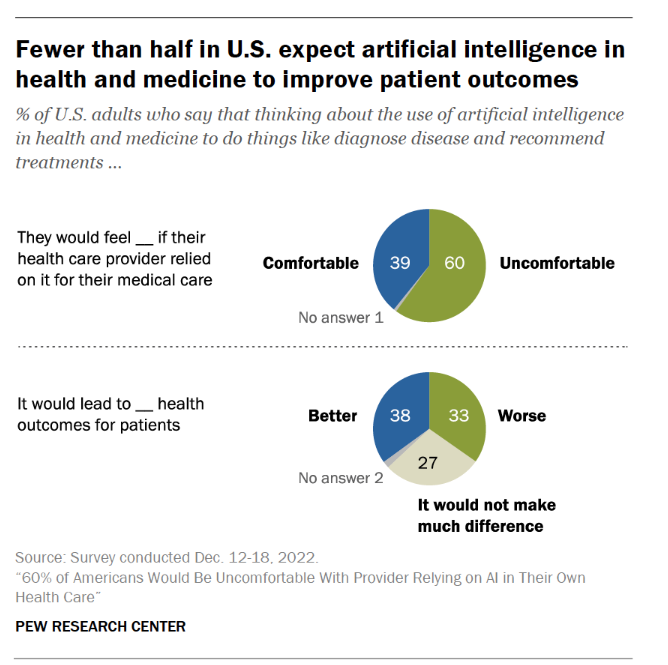

The top line: 60% of Americans would be uncomfortable with [their health] provider relying on AI in their own care, according to a consumer poll fielded in December 2022 among over 11,000 U.S. adults.

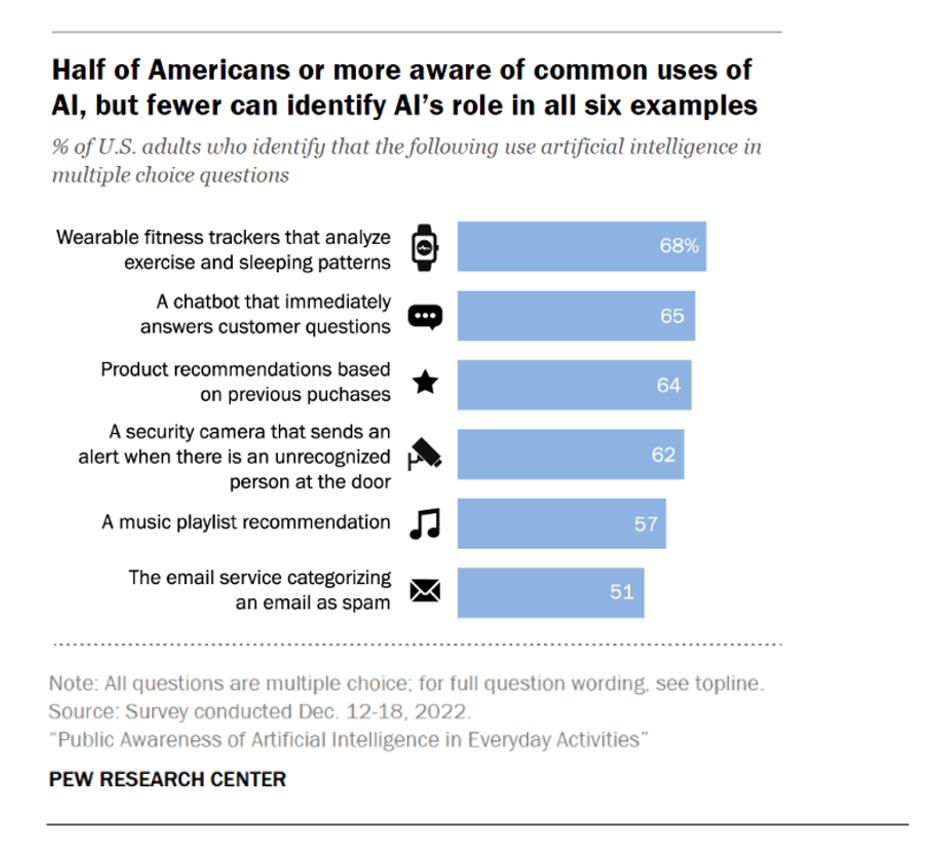

Most consumers who are aware of common uses of AI know about wearable fitness trackers that can analyze exercise and sleep patterns, a top health/wellness application of the technology. Other commonly known examples of AI in daily living included chatbots that respond to consumers' questions, product recommendations based on previous purchases, and security cameras that send alerts when they "see" an unrecognized person at the door.

Pew Research Center has published several analyses based on the data collected in the December 2022 consumer survey. I'll focus on a few of the health/care data points here, but I recommend reviewing the entire set of reports to learn how U.S. consumers view AI in daily life.

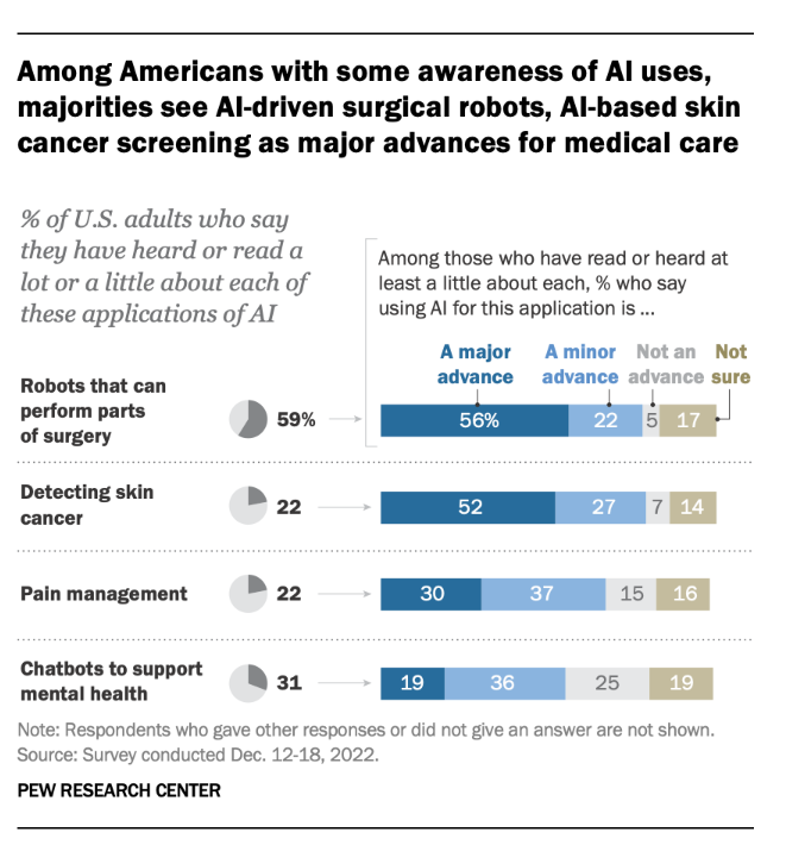

Among U.S. consumers who are aware of AI applications, more than one-half view robots that can perform surgery, as well as AI tools that can detect skin cancer, as major advances in medical care.

What about AI for pain management, and chatbots for mental health? Not so much: one-third of consumers perceive these as minor, rather than major, advancements.

For mental health, 46% of consumers thought AI chatbots should only be used by people who are also seeing a therapist. This view was held by roughly the same share of people who had heard of AI chatbots for mental health as of those who had not.

Less than half of people expect AI in health and medicine to improve patient outcomes. Note that one-third of people expect AI to lead to worse health outcomes.

Medical errors continue to challenge American health care. About 4 in 10 people see potential for AI to reduce the number of mistakes made by health care providers, as well as an opportunity for AI to address health care bias and advance health equity.

Bias is an important issue for AI applications to address in U.S. health care: the Pew study found that two in three Black adults say bias based on patients' race or ethnicity continues to be a major problem in health and medicine, with an additional one-quarter calling bias a minor problem.

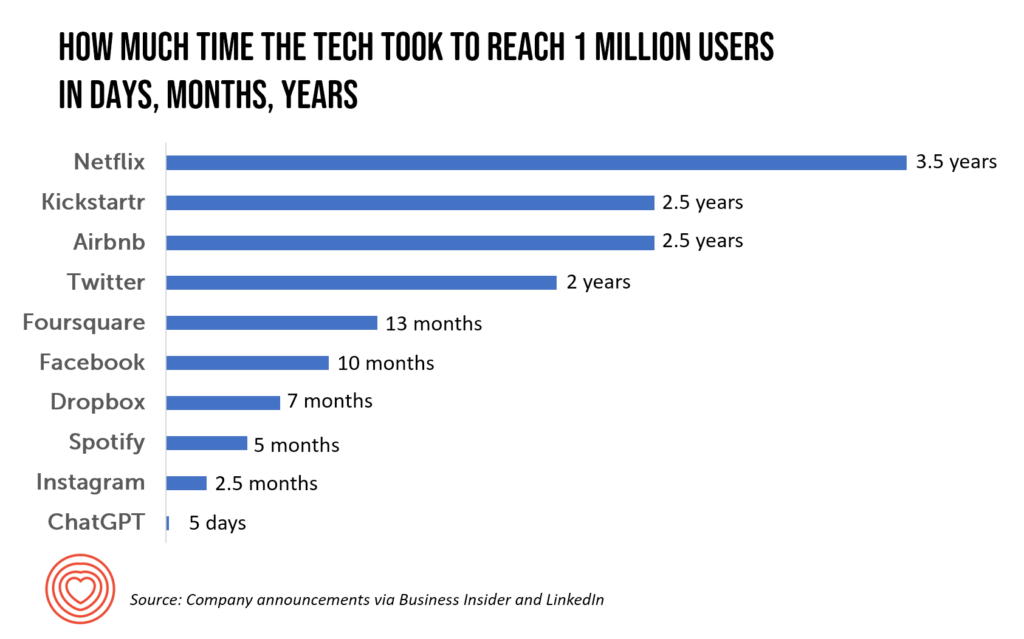

Health Populi's Hot Points: As industry moves ever faster toward AI, currently riding the hockey-stick curve of ChatGPT adoption, mass media are covering the issue to help consumers keep up with the technology. USA Today published a well-balanced, well-researched piece titled "ChatGPT is poised to upend medical information. For better and worse."

In her coverage, Karen Weintraub reminds us: “ChatGPT launched its research preview on a Monday in December [2022]. By that Wednesday, it reportedly already had 1 million users. In February, both Microsoft and Google announced plans to include AI programs similar to ChatGPT in their search engines.”

Dr. Ateev Mehrotra of Harvard and Beth Israel Deaconess, always a force for good data and evidence-based health care, added, "The best thing we can do for patients and the general public is (say), 'hey, this may be a useful resource, it has a lot of useful information — but it often will make a mistake and don't act on this information only in your decision-making process."

And Dr. John Halamka, now heading up the Mayo Clinic Platform, concluded that, while AI and ChatGPT won't replace physicians, "doctors who use AI will probably replace doctors who don't use AI." Dr. Halamka also recently discussed AI and ChatGPT developments in health care on the AMA Update webcast.

From the patient’s point of view, check out Michael L. Millenson’s column in Forbes discussing how in cancer, AI can empower patients and change their relationships with physicians and the care system.

A recent essay in The Conversation, co-written by a medical ethicist, explored a range of ethical issues that ChatGPT's adoption in health care could present. Privacy breaches of patient data, erosion of patient trust, the challenge of accurately generating evidence of the technology's usefulness, and assuring safety in health care delivery are among the risks the authors call out, as well as the potential to further entrench a digital divide and health disparities.

Vox published a piece this week arguing for slowing down the adoption of AI. Sigal Samuel asserted, "Pumping the brakes on artificial intelligence could be the best thing we ever do for humanity." She argues that, "Progress in artificial intelligence has been moving so unbelievably fast lately that the question is becoming unavoidable: How long until AI dominates our world to the point where we're answering to it rather than it answering to us?"

Samuel debunks three objections that AI proponents raise in this bullish, go-go, nascent phase of adoption:

- Objection 1: “Technological progress is inevitable, and trying to slow it down is futile”

- Objection 2: “We don’t want to lose an AI arms race with China,” and,

- Objection 3: “We need to play with advanced AI to figure out how to make advanced AI safe.”

Yesterday, Microsoft and Nuance announced their automated clinical documentation tool embedded with GPT-4 and the “conversational” (i.e., chat) model.

Given the fast-paced adoption of AI in medical care, with ChatGPT as a use case, we will all be impacted by this technology as patients, caregivers, and workers in the health/care ecosystem. It behooves us all to stay current, stay honest and transparent, and stay open to learning what works and what doesn't. And to ensure trust between patients, clinicians and the larger health care system, we must operate with a sense of privacy- and equity-by-design, respecting people's sense of values and value, and act with transparency and respect for all.

In hopeful mode, Dr. John Halamka concluded his conversation on the AMA Update with this optimistic view: “So how about this—if in my generation, we can take out 50% of the burden, the next generation will have a joy in practice.”

Concluding this discussion for now, wishing you joy for your work- and life-flows!
