Patients’ comfort with artificial intelligence is linked to their familiarity with the technology, a consumer survey from GlobalData found. Among patients unfamiliar with AI, 42% are uncomfortable with the technology, and another 50% feel neither comfortable nor uncomfortable with it. However, among patients familiar with AI, 60% feel comfortable visiting a medical practice that uses AI.

Welcome to the new health literacy challenge the health care sector will have to deal with, and soon: patients’ lack of awareness of AI, its promises, and its pitfalls.

“It is imperative to prioritize patient education regarding this technology,” Urte Jakimaviciute of GlobalData asserted based on the study findings. “This education should aim to enhance comprehension of AI’s utilization, its potential advantages, and associated adoption risks, ultimately fostering increased trust in AI.”

Enhanced knowledge empowers individuals to make informed decisions and mitigate biases linked to AI, Jakimaviciute believes.

GlobalData conducted this consumer survey between July and August 2023 among U.S. health citizens along with adults living in other nations.

We can look at a new study from PYMNTS Intelligence in collaboration with AI-ID for more evidence that U.S. health consumers could benefit from more education about AI’s role in health care.

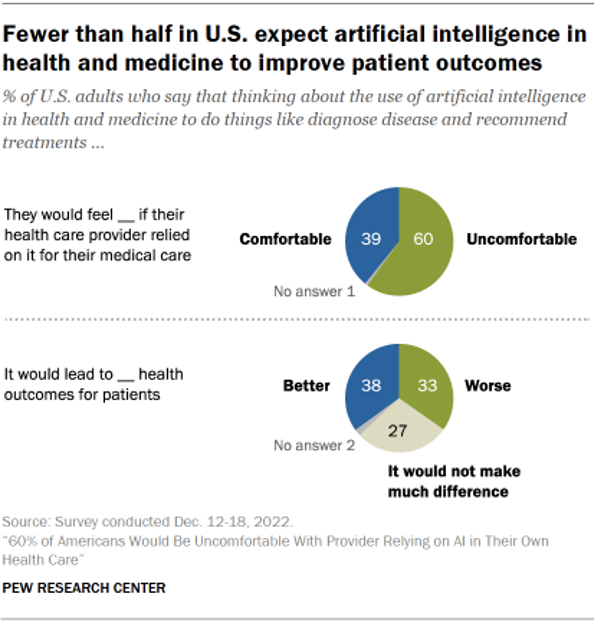

The Generative AI Tracker study from PYMNTS Intelligence and AI-ID found that 57% of Americans believe using AI to diagnose diseases and recommend treatments would harm the patient-provider relationship. And 60% of U.S. consumers would be uncomfortable with a provider relying on AI in their health care.

“Americans see the upsides of AI in their healthcare but need more time to become comfortable with the concept,” the PYMNTS report on the research noted.


PYMNTS Intelligence and AI-ID recommend that doctors and patients both have a say in shaping GenAI’s role in clinical settings, given the “fear of the unknown” felt by both sides of the shared decision-making relationship.

Patients tend to trust their personal physicians, and that clinical trust equity can be leveraged in the patient-physician relationship. On the patients’ side, the report explained,

“[They] must be part of the equation because, after all, it is their health that is at stake. Their fears, comfort levels, trust and consent are factors in determining whether and to what extent generative AI should be involved in their treatment.”

Health Populi’s Hot Points: Earlier this year, the Pew Research Center published updated data on Americans’ comfort with providers relying on AI for their own health care.

In consumer interviews conducted in December 2022, Pew found that 60% of U.S. patients would be uncomfortable if their health care provider relied on AI for their medical care. Patients were split on whether AI would lead to better, worse, or neutral health outcomes, as shown in the lower pie chart from the study.

Americans tend to be more concerned that health care providers will adopt AI technologies too quickly, before fully understanding the risks to patients, than too slowly to capture the benefits of adopting AI.

Pew found that only 38% of Americans thought the use of AI in health care would improve patient outcomes. Positive opinions about AI’s potential to bolster outcomes were more common among U.S. adults with more education (college graduates and postgraduates), among men than women, and among younger adults (ages 18-29) than among people 50 years of age and older.

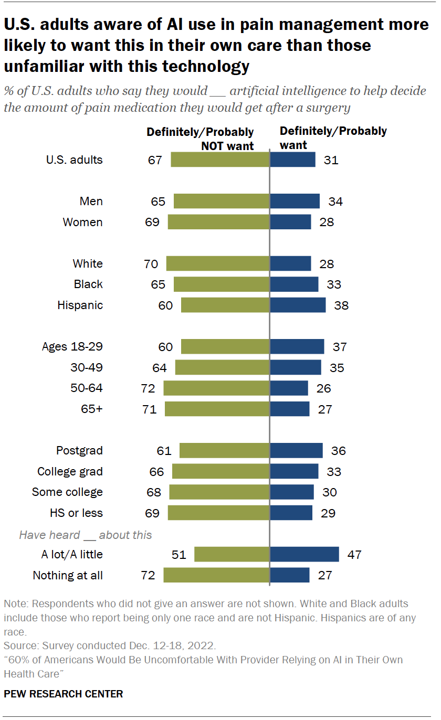

As for the specific medical condition of pain, the bar chart here illustrates demographic differences among U.S. consumers in wanting AI to help determine their pain management. Patients who had heard a lot about AI were more likely to want the technology used in their pain management, as were people under 50, college graduates and postgraduates, and Hispanic adults, who were 10 percentage points more likely than White adults to want it.

The World Health Organization has offered guidance on core ethical principles to consider when designing, developing, and deploying AI for health care.


These principles are:

- Protecting autonomy

- Promoting human well-being, human safety, and the public interest

- Ensuring transparency, explainability, and intelligibility

- Fostering responsibility and accountability

- Ensuring inclusiveness and equity

- Promoting AI that is responsive and sustainable

Ultimately, the PYMNTS Intelligence + AI-ID study concluded, “society needs to be ready for and comfortable with the involvement of generative AI in managing patient health and treatment.”

As we work to “ensure inclusiveness” as recommended in the WHO principles, the patient must be included in the health care ecosystem’s design and implementation of AI in medical care.

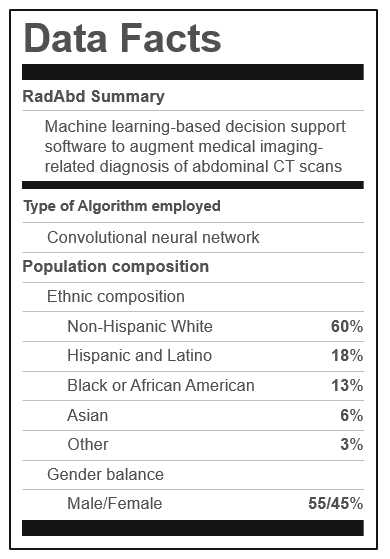

And to promote AI+health literacy, consider the utility and benefit of using a “Data Facts” label akin to the one shown here. Read more about John Halamka’s and others’ concept for a “nutrition label” that communicates algorithmic transparency…

Interviewed live on BNN Bloomberg (Canada) on the market for GLP-1 drugs for weight loss and their impact on both the health care system and consumer goods and services -- notably, food, nutrition, retail health, gyms, and other sectors.

As you may know, I have been splitting work- and living-time between the U.S. and the E.U., most recently living in and working from Brussels. In the month of September 2024, I'll be splitting time between London and other parts of the U.K., and Italy where I'll be working with clients on consumer health, self-care and home care focused on food-as-medicine, digital health, business and scenario planning for the future...