“Generative AI already has the power to alleviate some of providers’ biggest woes, which include rising costs and high inflation, clinician shortages, and physician burnout,” Bain & Company observe in Beyond Hype: Getting the Most Out of Generative AI in Healthcare Today.

By the end of the paper, the Bain team concludes that health care leaders who invest in generative AI today (to start small to win big, in the report’s words) will learn key lessons that will put them “on a path to eventually revolutionize their businesses.”

Bain & Company surveyed U.S. health system leaders to gauge their perspectives on AI in light of healthcare’s current challenges such as negative margins, rising costs for labor and supplies, and clinician burnout.

While most health care leaders believe generative AI can re-shape health care, only 6% have put in place a strategy to embed generative AI (abbreviated here as GPT) in their operations.

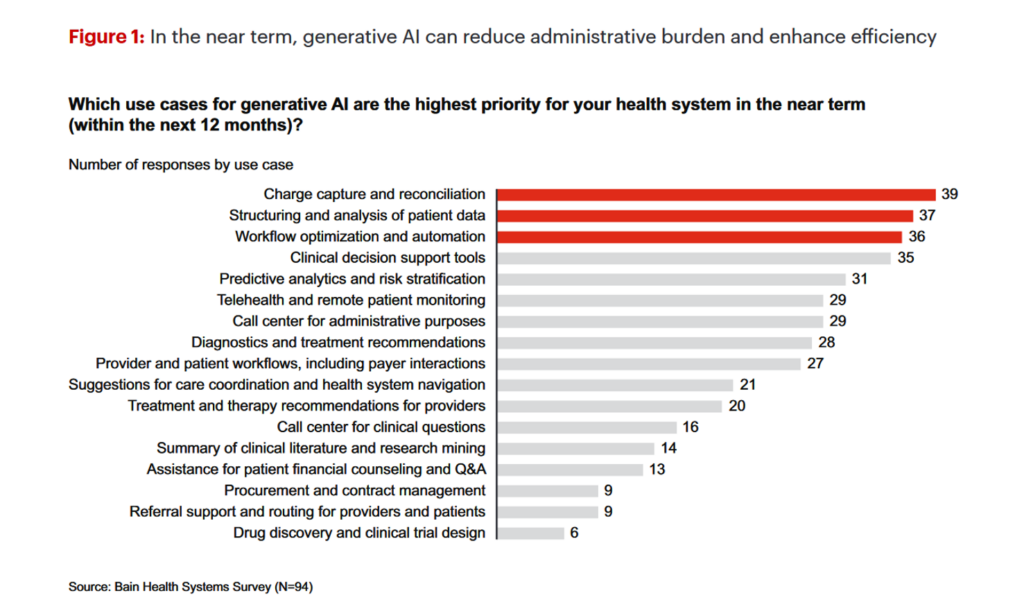

In the short term, over the next year, health system leaders’ top-priority use cases for GPT are charge capture and reconciliation, structuring and analysis of patient data, workflow optimization and automation, and clinical decision support tools.

These priorities are clearly focused on reducing administrative burdens and bolstering operational efficiencies.

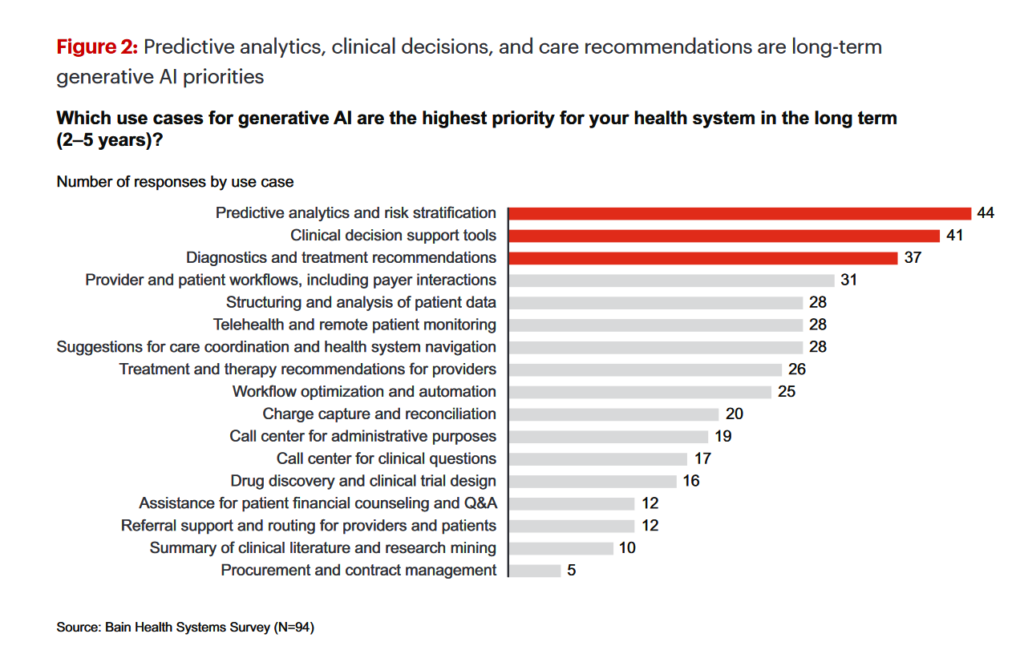

Looking 2-3 years out to the longer term, GPT priorities shift more toward care and provider workflows: predictive analytics and managing risk, clinical decision support, diagnostics and treatment recommendations, and provider and patient workflows (including payer links) rank higher than the financial/administrative priorities identified in the immediate term.

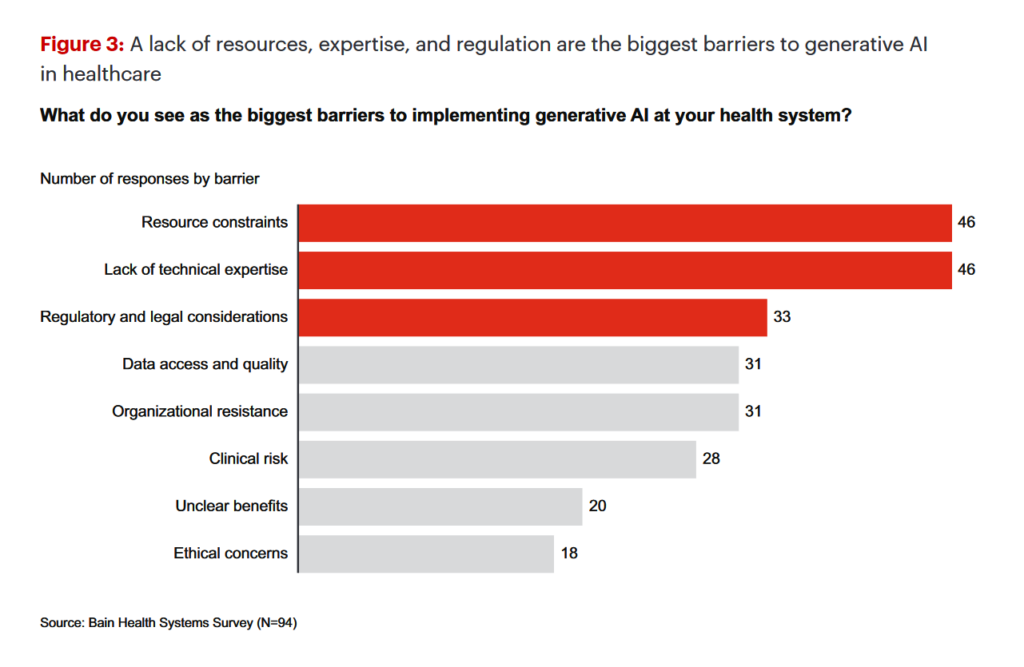

Getting to GPT Nirvana will require overcoming some critical obstacles, two ranking at the top of the barrier-list: resource constraints and lack of technical expertise, followed by regulation and legal issues.

Bain & Co. identify four principles to help deal with these barriers and risks:

- Pilot low-risk applications, initially with a narrow focus. Doing so can mitigate regulatory and legal risk, and also amp up technical expertise through experience with low-hanging-fruit implementations.

- Decide to buy, partner or build, considering different avenues to accomplishing goals and proving out use cases by allying with collaborators or buying expertise.

- Funnel cost savings and experience into bigger bets; that is, channel the financial benefits of early GPT adoption into riskier and larger-scale programs.

- Finally, “Remember that generative AI isn’t a strategy unto itself,” the Bain team asserts. The tool isn’t the end-game: GPT is an enabling technology and should be deployed with the desired use cases and objectives in mind.

Health Populi’s Hot Points: The Bain study focuses on the health care providers’ “supply side” of AI, which would inform health care clinician workflows, administrators’ financial and capital planning, and researchers’ analytics in clinical trials and basic science work.

As we are all health care, all the time here at Health Populi, we have to explore the consumer/demand side for AI in terms of what patients, caregivers, and health citizens think about AI in this relatively nascent era — albeit fast-moving when we consider the Bain research defines the “long term” as 3 years.

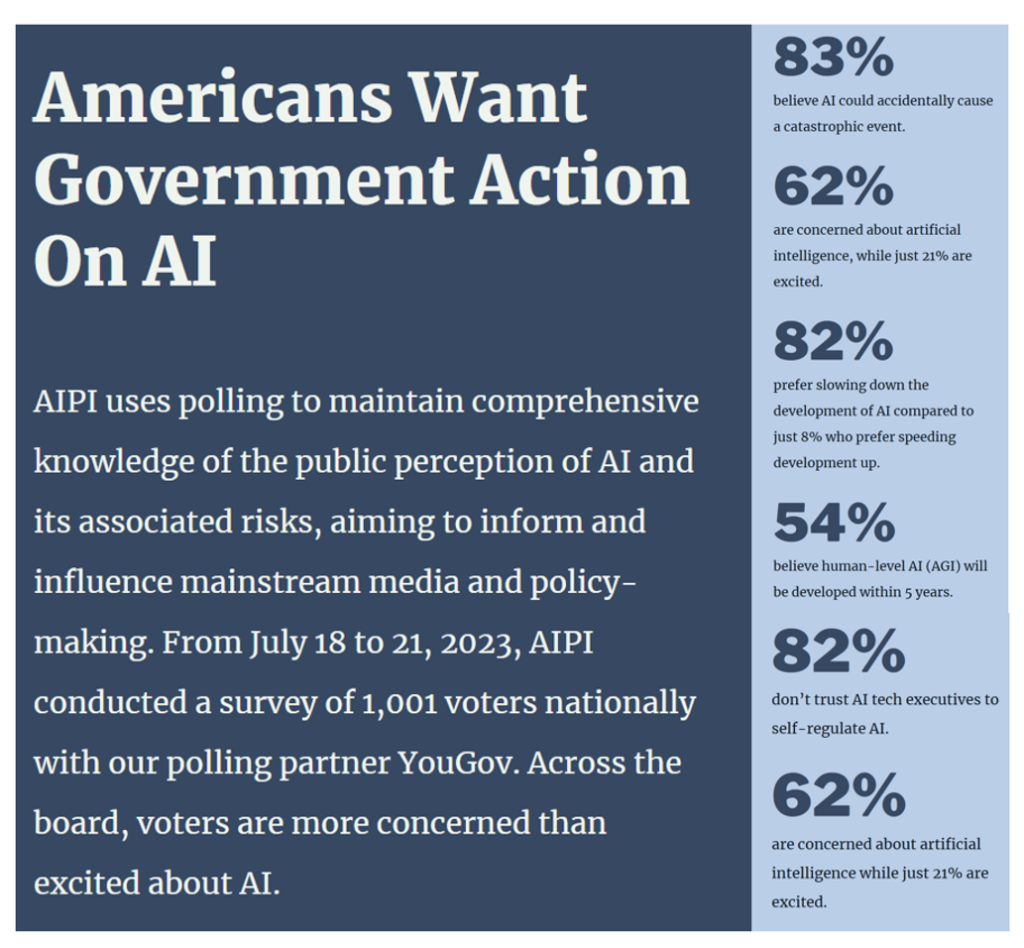

Today, new consumer research was published from the AI Policy Institute (AIPI) which shows that voters are more concerned than excited about AI.

As the summary graphic from the survey results tells us, 62% of U.S. consumers are concerned about AI while only 21% are “excited.”

That concern underpins the data point that 82% of people prefer slowing down the development of AI compared to only 8% who would like to see AI development sped up.

In addition, 82% of people do not trust AI tech executives to self-regulate AI.

Trust is a crucial ingredient in a patient’s health care engagement — especially powerful in her collaboration with physicians, nurses, and pharmacists.

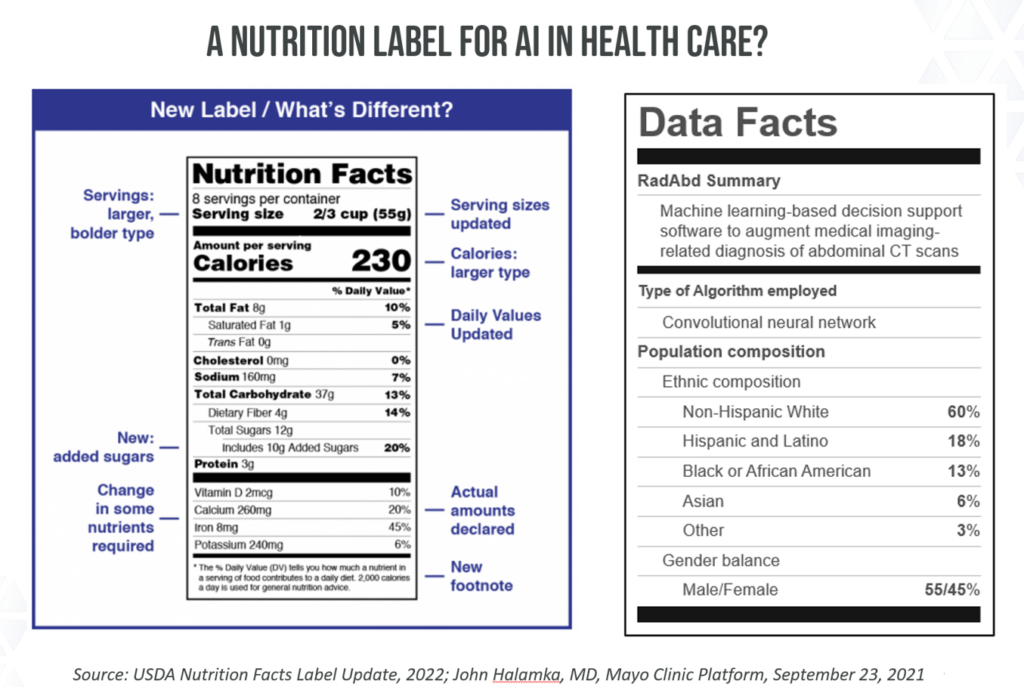

As AI becomes embedded in health care decision making, and particularly in clinical care at the point where patients and clinicians meet, it will be important for health citizens to understand how GPT is playing into the recommendations they receive from providers, payors, and other touchpoints in people’s personal health/care ecosystems. John Halamka’s AI “nutrition label” could be a useful tool for communicating the “AI/GPT inside” various clinical recommendations, studies, and other information provided to patients.

In an interview this month, John talked about GPT, saying, “I think where we are with generative AI is it’s not transparent, it’s not consistent, and it’s not reliable yet. So we have to be a little bit careful with the use cases we choose.”
